Performance Optimization Campaign Pipeline Template

Turn vague perf complaints into a clear campaign

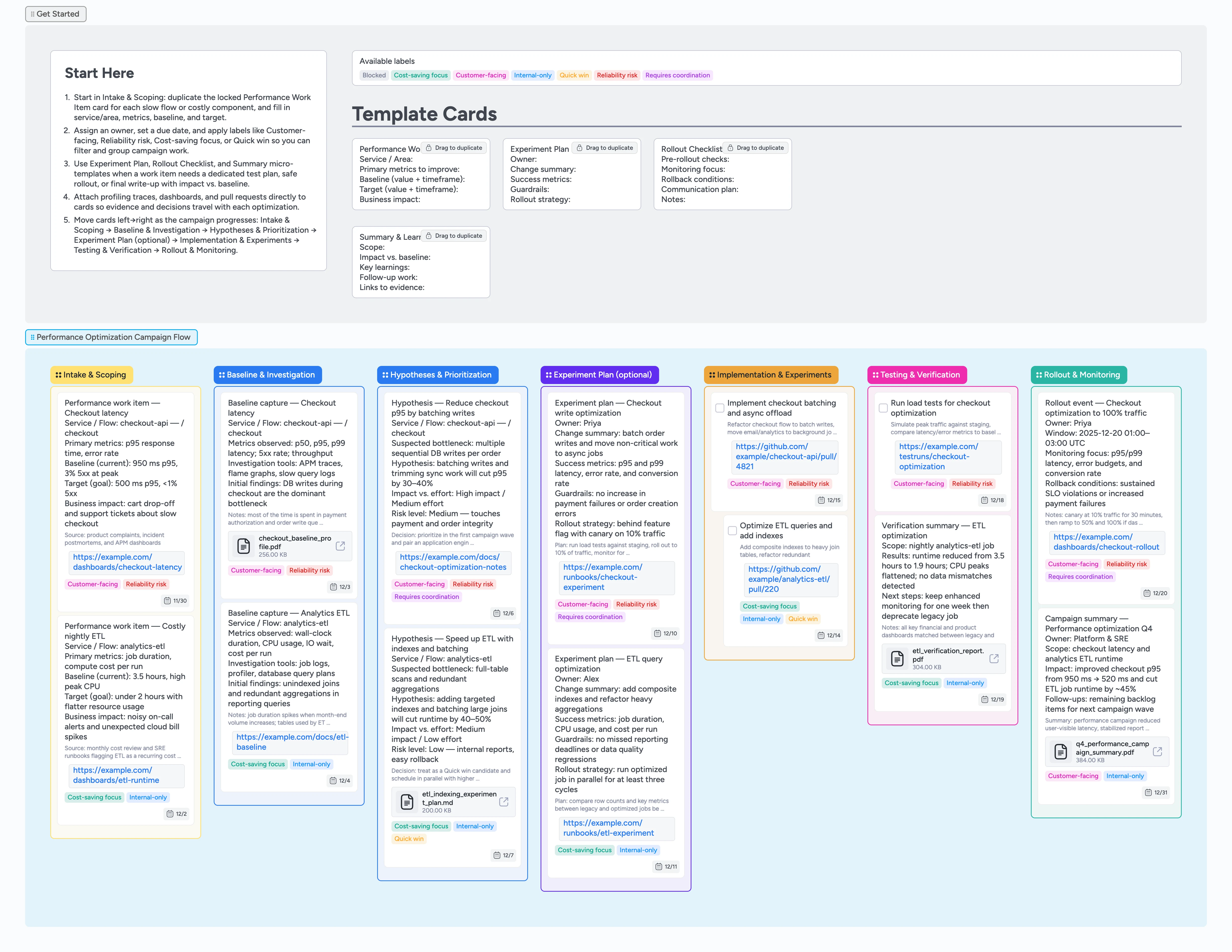

Instead of chasing one-off "the app feels slow" reports, this template turns performance work into a visible, repeatable campaign board the whole team can scan to see bottlenecks, owners, and impact. Start by duplicating the Performance Work Item micro-template so every bottleneck has fields for service or area, metrics, baseline, target, and business impact ready to fill. Use labels like Customer-facing, Reliability risk, Cost-saving focus, Quick win, and Requires coordination so you can slice the board during standups or perf reviews. When a change is risky, duplicate the Experiment Plan card, attach traces, dashboards, or RFCs, and spell out success metrics and guardrails. As cards move from Intake & Scoping through Rollout & Monitoring, everyone sees where performance work sits, what is blocked, and what impact you have already unlocked.

- Capture every bottleneck as a card with metrics, owners, and labels in one board

- Baseline and track p95 latency, error rate, and cost by filling card fields and updating them as experiments complete

- Use micro-templates to design experiments, rollouts, and summaries consistently

- Make owners, labels, and due dates obvious during performance planning

- Keep traces, dashboards, and reports attached to each optimization card

Start in Intake & Scoping — log perf work items

In the Performance Optimization Campaign Flow, start in the Intake & Scoping column. Duplicate the Performance Work Item micro-template card, then drag the new card into this column. Fill in Service / Area, Primary metrics to improve, Baseline (value + timeframe), Target (value + timeframe), and Business impact so anyone can quickly understand why this optimization matters. Assign an owner and set a due date in the card header so the work has a clear accountable person and timeline. Attach initial evidence such as dashboards or traces as links or files on the card. Apply labels like Customer-facing, Internal-only, Reliability risk, or Cost-saving focus so you can filter and group work as the campaign grows.
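If it helps to see the Performance Work Item fields as data, here is a minimal Python sketch. The class names, the `required_improvement_pct` helper, and every value in the example (service name, numbers, owner, date) are illustrative assumptions, not part of the template or any board API.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Measurement:
    """A metric value plus the timeframe it covers or is due by."""
    value: float
    unit: str
    timeframe: str


@dataclass
class PerformanceWorkItem:
    """Hypothetical mirror of the Performance Work Item card fields."""
    service_area: str
    primary_metric: str
    baseline: Measurement
    target: Measurement
    business_impact: str
    owner: str
    due_date: date
    labels: list[str] = field(default_factory=list)

    def required_improvement_pct(self) -> float:
        """Percent reduction needed to move from baseline to target."""
        return (self.baseline.value - self.target.value) / self.baseline.value * 100


# Made-up example values for a slow checkout flow.
item = PerformanceWorkItem(
    service_area="checkout-api",
    primary_metric="p95 latency",
    baseline=Measurement(800.0, "ms", "last 7 days"),
    target=Measurement(600.0, "ms", "end of quarter"),
    business_impact="Slow checkout risks abandoned carts",
    owner="alex",
    due_date=date(2025, 3, 31),
    labels=["Customer-facing", "Quick win"],
)
print(f"{item.required_improvement_pct():.1f}% reduction needed")
```

Writing the baseline and target as value plus timeframe, as the card does, makes the size of the gap explicit before anyone starts investigating.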

Pro tip: Keep the Intake & Scoping column open during incident reviews or planning meetings so new performance work items land in a single place.

Baseline and investigate before you change anything

Once someone is actively digging into a card, move it into Baseline & Investigation. Use the description to capture the measurement window, tools you used, and a short summary of what you found so far. Attach profiling traces, slow query plans, or screenshots of relevant dashboards directly to the card so future readers do not have to re-run the same investigation. If you want input from others, add a quick note in the card so teammates see what you measured and when. If you uncover multiple related bottlenecks, duplicate the Performance Work Item micro-template to create a separate card for each and group them visually in the column. Update labels as you refine your understanding, for example by adding Reliability risk to a card that clearly threatens SLOs.
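If your tooling does not already report percentiles, a baseline such as p95 latency can be computed from raw samples. The sketch below uses the nearest-rank method, which is one common definition among several; your APM may interpolate differently, so record which method you used on the card. The function name and sample values are assumptions for illustration.

```python
def percentile_nearest_rank(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100.

    Returns the smallest sample such that at least pct percent of all
    samples are less than or equal to it.
    """
    if not samples:
        raise ValueError("need at least one sample")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(samples)
    # Integer ceiling of pct/100 * n, avoiding floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


# Made-up latency samples in milliseconds from one measurement window.
# With only 10 samples, p95 is simply the largest value; a real window
# should contain far more samples.
latencies_ms = [120, 135, 150, 160, 180, 210, 240, 300, 450, 900]
baseline_p95 = percentile_nearest_rank(latencies_ms, 95)
baseline_p50 = percentile_nearest_rank(latencies_ms, 50)
print(f"p50={baseline_p50} ms, p95={baseline_p95} ms")
```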

Shape hypotheses and experiments with micro-templates

When you are ready to propose changes, drag cards into Hypotheses & Prioritization. In the card description, or as a short checklist in the notes area, outline the suspected bottleneck, the hypothesis, the expected impact, and the rough effort so the team can compare work items side by side. Use labels like Quick win or Requires coordination to flag which optimizations can ship quickly and which need cross-team alignment. For higher-risk items, move the card into Experiment Plan (optional) and duplicate the Experiment Plan micro-template. Fill its fields with change summary, success metrics, guardrails, and rollout strategy so everyone understands how you will test safely. Mention teammates and attach design docs or RFCs on the same card instead of scattering context across tools.
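One lightweight way to compare impact vs. effort is to score each hypothesis and sort. The sketch below assumes the team rates impact and effort on a 1 to 5 scale; the scale, the ratio score, and the Quick win threshold are hypothetical rules of thumb, not something the template prescribes.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    title: str
    impact: int  # team estimate, 1 (low) to 5 (high)
    effort: int  # team estimate, 1 (low) to 5 (high)

    @property
    def score(self) -> float:
        """Higher means more expected impact per unit of effort."""
        return self.impact / self.effort


def is_quick_win(h: Hypothesis) -> bool:
    """Hypothetical threshold for suggesting the Quick win label."""
    return h.impact >= 3 and h.effort <= 2


# Made-up estimates for three candidate optimizations.
candidates = [
    Hypothesis("Re-partition analytics ETL job", impact=5, effort=5),
    Hypothesis("Add index for checkout order lookup", impact=4, effort=1),
    Hypothesis("Tune cache settings for pricing", impact=3, effort=2),
]

ranked = sorted(candidates, key=lambda h: h.score, reverse=True)
for h in ranked:
    label = "Quick win" if is_quick_win(h) else "-"
    print(f"{h.score:.1f}  {h.title}  [{label}]")
```

A score like this is only a conversation starter: a low-scoring item can still deserve priority if it carries the Reliability risk label.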

Pro tip: Create one Experiment Plan card per high-impact work item so every experiment follows the same structure and you can reuse it across multiple campaigns.

Implement, test, and verify changes end-to-end

As work starts, move cards into Implementation & Experiments and turn them into task cards if you want a checkbox to mark when implementation is complete. Attach pull requests, feature flag links, or configuration diffs so reviewers can jump straight from the board into the change. Use indentation to nest supporting tasks, such as adding indexes or tuning cache settings, under the primary work item. When changes are ready to validate, drag cards into Testing & Verification and record load test results, before-and-after metrics, and any regressions caught. If something gets stuck, apply the Blocked label and note why in the description so it is obvious what is holding the work back.

Roll out, monitor, and capture learnings

When a change is ready for production, move its card into Rollout & Monitoring. Duplicate the Rollout Checklist micro-template to spell out pre-rollout checks, monitoring focus, rollback conditions, and communication steps in one place. During the rollout window, keep dashboards open and add quick notes or screenshots to the card so the history of what you observed stays attached. After the observation window, duplicate Summary & Learnings, fill in scope, impact vs. baseline, key learnings, and follow-up items, and attach any final reports. Leave these summary cards in the Rollout & Monitoring column so future performance campaigns can reference what worked and what did not.
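Rollback conditions are easier to act on when they are written as explicit thresholds. The sketch below checks observed metrics against guardrails copied from a Rollout Checklist card; the metric names, limits, and observed values are made up, and treating a missing metric as a breach is a design assumption that errs on the side of caution.

```python
from dataclasses import dataclass


@dataclass
class Guardrail:
    metric: str
    max_allowed: float


def breached_guardrails(observed, guardrails):
    """Return the names of guardrails that are breached.

    A metric missing from `observed` counts as a breach, because an
    unmonitored guardrail cannot be confirmed safe.
    """
    breached = []
    for g in guardrails:
        value = observed.get(g.metric)
        if value is None or value > g.max_allowed:
            breached.append(g.metric)
    return breached


# Hypothetical guardrails and one observation during the rollout window.
guardrails = [
    Guardrail("p95_latency_ms", 650.0),
    Guardrail("error_rate_pct", 1.0),
]
observed = {"p95_latency_ms": 610.0, "error_rate_pct": 1.4}

breaches = breached_guardrails(observed, guardrails)
if breaches:
    print("Rollback conditions met for:", ", ".join(breaches))
else:
    print("All guardrails within limits")
```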

Pro tip: At the end of the quarter, scan the Rollout & Monitoring column and export it as a lightweight performance campaign report.

What’s inside

Seven-stage performance campaign flow

Intake & Scoping, Baseline & Investigation, Hypotheses & Prioritization, Experiment Plan (optional), Implementation & Experiments, Testing & Verification, and Rollout & Monitoring — move cards left-to-right so engineering and SRE leads can see where work is stuck without digging through separate tickets.

Micro-templates for perf work

Performance Work Item, Experiment Plan, Rollout Checklist, and Summary & Learnings cards are ready to duplicate so every optimization captures metrics, hypotheses, rollout steps, and impact in a consistent structure.

Labels tuned for risk and focus

Customer-facing, Internal-only, Reliability risk, Cost-saving focus, Quick win, Requires coordination, and Blocked labels let you filter by user impact, risk, and status. Apply them during intake, then use board filters during standups or post-incident reviews.

Demo cards for real-world bottlenecks

Filled example cards show a slow checkout flow and a heavy analytics ETL job with baselines, targets, assignees, labels, and attached evidence so teams can copy a solid example instead of starting from a blank card.

Summary card for campaign results

The Rollout & Monitoring column includes a campaign summary card that captures scope, final impact, and follow-up items, giving you a lightweight record of what this performance campaign achieved.

Why this works

- Turns vague performance complaints into structured work items with clear metrics and owners

- Keeps hypotheses, experiments, and rollout plans attached to the same cards as code changes

- Helps teams balance quick wins against higher-risk work using labels and stages

- Makes campaign progress visible at a glance by moving cards left-to-right across stages

- Creates a reusable performance campaign pattern you can clone for future optimization cycles

FAQ

Can we use this for both web and backend services?

Yes. Treat each card as a performance work item for a specific endpoint, job, or component, and fill in the metrics and impact that matter for that system.

How does this work with our existing APM and dashboards?

Keep instrumentation where it already lives, then attach key dashboards, traces, and reports as links or files to cards so the board becomes the coordination layer, not another monitoring tool.

Is this overkill for a small engineering team?

No. For teams of one to five engineers, the template keeps performance work from getting buried under feature work by making goals, owners, and status visible without adding heavyweight process.

Can we reuse the board for future performance campaigns?

Yes. When a campaign ends, keep the summary cards in Rollout & Monitoring as a record, duplicate the board, clear old work items, and start a new cycle with the same structure and micro-templates.